In the first six months of 2025, TikTok took down 7,464,081 videos in Nigeria for violating its community guidelines: 3,683,655 between January and March and another 3,780,426 between April and June. To put that in perspective, that's over 41,000 videos removed every single day, or roughly 1,700 videos every hour.
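The daily and hourly figures come from dividing the six-month total by the 181 calendar days between 1 January and 30 June 2025. Below is a minimal Python sketch of that arithmetic. It assumes removals were spread evenly across the period, because the report itself gives only quarterly totals.

```python
from datetime import date

# Quarterly removal counts for Nigeria, as reported above
q1_removals = 3_683_655  # January-March 2025
q2_removals = 3_780_426  # April-June 2025

total = q1_removals + q2_removals

# Calendar days from 1 January to 30 June 2025 inclusive (2025 is not a leap year)
days = (date(2025, 7, 1) - date(2025, 1, 1)).days

# Assumption: removals are spread evenly over the period
per_day = total / days
per_hour = per_day / 24

print(f"total removals: {total:,}")
print(f"days: {days}")
print(f"per day: {per_day:,.0f}")
print(f"per hour: {per_hour:,.0f}")
```

Run as-is, it prints a total of 7,464,081 over 181 days, which works out to about 41,238 removals per day and about 1,718 per hour.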

The numbers, released in TikTok’s latest transparency report, reveal both the scale of harmful content on the platform and the company’s increasingly aggressive approach to policing what Nigerians see and share.

One of the most striking findings is how quickly TikTok now catches problematic content. In the first quarter of 2025, 88.2% of all removed videos had zero views. In the second quarter, that figure edged up slightly to 88.3%. In other words, nearly 9 out of 10 removed videos were deleted before anyone saw them.

This is largely thanks to TikTok’s automated moderation systems, which now handle the bulk of enforcement work. In the January-March period, AI-driven tools removed 3.1 million videos, while in April-June, they handled 3.27 million videos, consistently accounting for about 84-86% of all takedowns.
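The automated share for each quarter is automated removals divided by total removals. The Python check below uses the rounded automated figures quoted above (3.1 million and 3.27 million), so the percentages it produces are approximate.

```python
# Total removals in Nigeria per quarter (from the report)
total_removals = {"Q1 2025": 3_683_655, "Q2 2025": 3_780_426}

# Automated removals, rounded to the figures quoted in the article
automated_removals = {"Q1 2025": 3_100_000, "Q2 2025": 3_270_000}

shares = {}
for quarter, total in total_removals.items():
    # Share of all takedowns in the quarter that were automated
    shares[quarter] = automated_removals[quarter] / total
    print(f"{quarter}: {shares[quarter]:.0%} of removals were automated")
```

It prints 84% for Q1 and 86% for Q2, in line with the range given above.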

The speed is impressive too. In the first quarter, 73.8% of user reports were processed within two hours. By the second quarter, this improved to 77.8%. Today, only about 9% of reports take longer than eight hours to resolve.

For a platform that has faced criticism globally for slow responses during viral incidents, this marks a significant improvement.

What TikTok content gets removed most often?

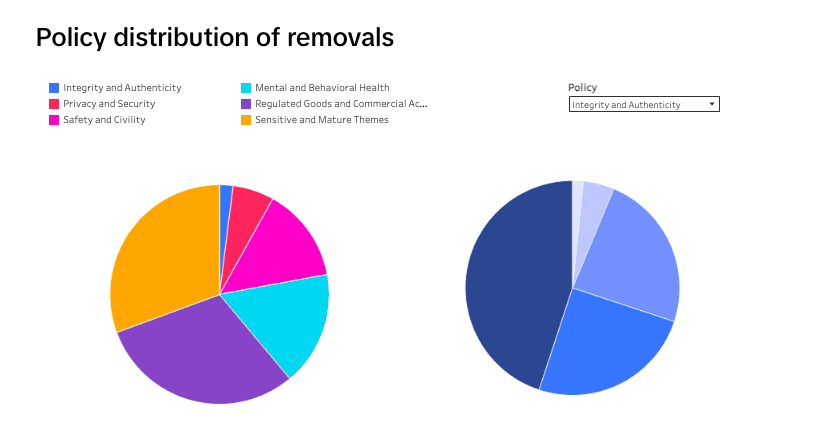

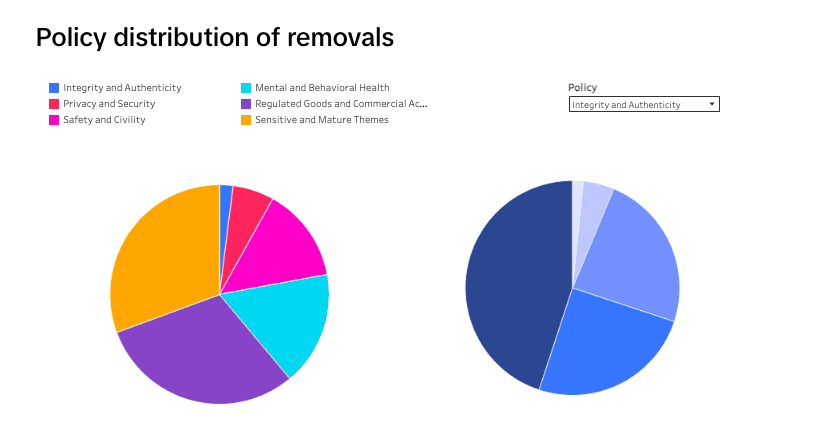

TikTok's enforcement data shows that most removals fall under a few key categories. Based on the policy distribution charts, the categories with the most removals globally, and likely in Nigeria, are:

- Safety and civility violations make up the largest share, accounting for issues like bullying, harassment, hate speech, and dangerous challenges. In Nigeria’s dataset, violations related to dangerous activities and challenges represented a significant portion of takedowns.

- Regulated goods and commercial activity is another major category, covering everything from illegal sales to promoting counterfeit products and unauthorised financial schemes. This category alone accounted for over 36% of removals in some quarters.

- Sensitive and mature themes include graphic violence, sexually explicit content, and disturbing imagery. Nigerian data shows that shocking or graphic content, as well as nudity and body exposure, consistently rank among the top violations.

- Youth safety remains a critical focus. Content involving minors, particularly youth sexual and physical abuse, saw some of the highest enforcement rates, with near-total proactive removal in most quarters.


Scams and fake content are harder to stop

Despite strong overall numbers, TikTok’s systems still struggle with two specific types of harmful content: scams and AI-generated misinformation.

In the first quarter of 2025, content involving fraud and scams had a pre-view removal rate of just 44.4%, meaning more than half of scam videos were seen by users before being flagged and removed.

Similarly, AI-generated or edited media designed to deceive had a pre-view catch rate of only 46.6%.
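The pre-view removal rate is the share of removed videos taken down before anyone watched them. Its complement is therefore the share that had already been seen. The small Python illustration below uses the Q1 2025 rates quoted in this article.

```python
# Q1 2025 share of removed videos taken down before receiving any views
pre_view_rates = {
    "all removed videos": 0.882,
    "fraud and scams": 0.444,
    "deceptive AI-generated or edited media": 0.466,
}

# The complement is the share that was viewed at least once before removal
seen_before_removal = {k: 1 - v for k, v in pre_view_rates.items()}

for category, share in seen_before_removal.items():
    print(f"{category}: {share:.1%} seen before removal")
```

For scams and deceptive AI media, more than half of removed videos had been viewed at least once (55.6% and 53.4%). Across all removals, the figure was about 12%.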

Results in the second quarter were mixed. Enforcement against hate speech strengthened, but detection of other violations weakened. Disordered eating content is one example: just 19.8% of it was removed before any views in Q2.

Scam content also stayed up longer. In Q1, only 61.8% of it was removed within 24 hours, far below the 90-99% range seen in categories such as youth safety, privacy violations, and graphic content.

This suggests that while TikTok’s AI excels at identifying traditional policy violations, like nudity, violence, or hate speech, it’s still learning to detect the more subtle, fast-evolving tactics used by scammers and creators of deepfakes or manipulated media.

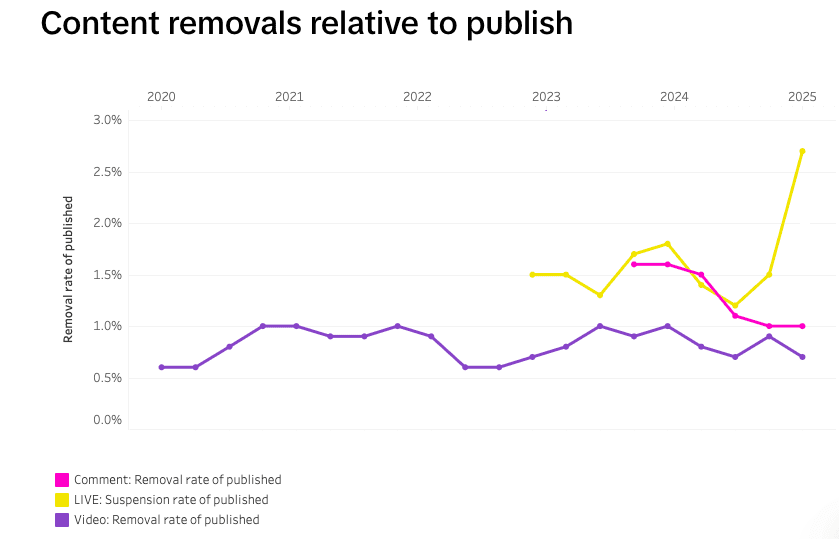

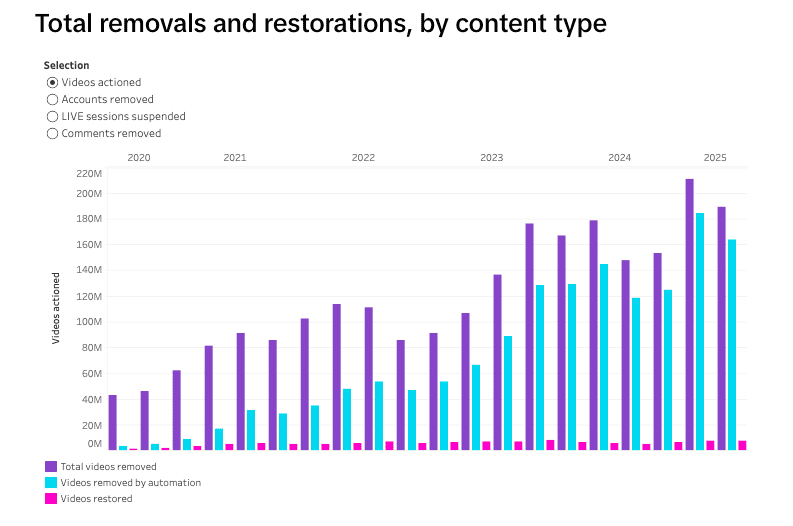

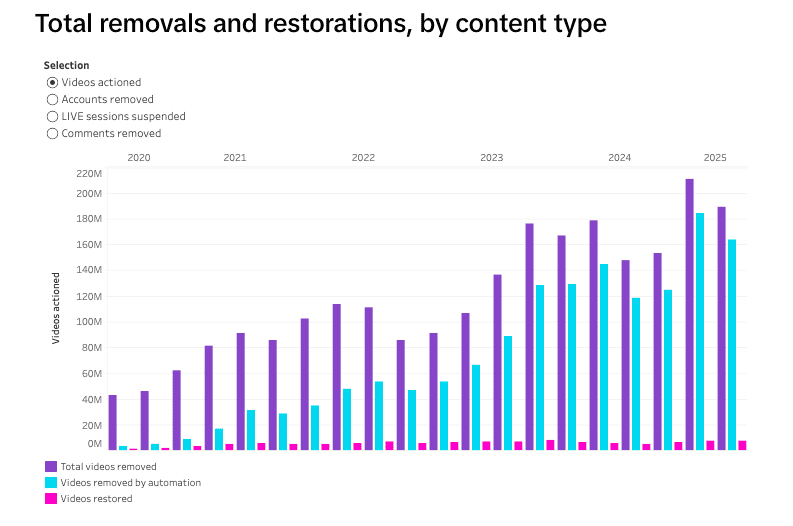

The transparency charts show a clear trend: TikTok is removing far more content now than it did just a few years ago. Global video removals climbed from under 50 million in 2020 to over 200 million by 2025. Automated removals followed the same trajectory, rising from under 20 million to nearly 180 million.

In Nigeria specifically, quarterly removals have steadily increased. From 859,458 videos in the third quarter of 2022 to 3.68 million in Q1 2025 and 3.78 million in Q2 2025, the volume of enforcement has more than quadrupled in less than three years.
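To check the "more than quadrupled" claim, divide the latest quarterly figure by the Q3 2022 baseline. The short Python sketch below does this and also counts the quarters elapsed from Q3 2022 to Q2 2025. The `quarter_index` helper is a hypothetical convenience written for this example.

```python
# Quarterly video removals in Nigeria (from the report)
baseline = ("Q3 2022", 859_458)
latest = ("Q2 2025", 3_780_426)

def quarter_index(label: str) -> int:
    """Hypothetical helper: convert a label such as 'Q3 2022' into a running quarter count."""
    q, year = label.split()
    return int(year) * 4 + int(q[1]) - 1

growth_multiple = latest[1] / baseline[1]
quarters_elapsed = quarter_index(latest[0]) - quarter_index(baseline[0])

print(f"growth multiple: {growth_multiple:.2f}x")
print(f"time elapsed: {quarters_elapsed} quarters ({quarters_elapsed / 4} years)")
```

The script reports a multiple of about 4.40 over 11 quarters (2.75 years). That is consistent with "more than quadrupled in less than three years."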

At the same time, video restorations (cases where TikTok mistakenly removed content and later reinstated it) remain relatively low. In the first quarter of 2025, 173,554 videos were restored out of 3.68 million removals (4.7%). In the second quarter, 149,234 were restored out of 3.78 million (3.9%).
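The restoration rate is restored videos divided by removals. The minimal Python version below compares each quarter's restorations with that same quarter's removals, as the article does. This is an assumption: the comparison may not hold exactly if some restored videos were taken down in an earlier quarter.

```python
# Restored and removed video counts for Nigeria (from the report)
quarters = {
    "Q1 2025": {"restored": 173_554, "removed": 3_683_655},
    "Q2 2025": {"restored": 149_234, "removed": 3_780_426},
}

# Assumption: restorations are divided by removals from the same quarter
restoration_rate = {
    q: counts["restored"] / counts["removed"] for q, counts in quarters.items()
}

for q, rate in restoration_rate.items():
    print(f"{q}: {rate:.1%} of removed videos were restored")
```

It prints 4.7% for Q1 and 3.9% for Q2, matching the percentages above.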

The falling restoration rate points to fewer wrongful takedowns, which suggests the platform's automated systems are becoming more accurate over time.

What this means for Nigerian users

For everyday Nigerians scrolling through TikTok, these numbers mean two things. First, the platform is safer than it was a few years ago. Harmful content is being caught faster, often before it can spread or cause damage. Second, the system isn’t perfect, especially when it comes to financial scams and deceptive AI content, which remain real threats.

TikTok also took significant enforcement action against live sessions in Nigeria. Live broadcasts were subject to enforcement in the first quarter of 2025. In the second quarter, 49,512 live sessions were banned for violating monetisation and safety guidelines.